ChatGPT REST API node.js

This is an example of a serverless Node.js application that uses codehooks.io to create a REST API for the OpenAI ChatGPT language model. The API allows REST API clients to send text prompts to the ChatGPT model and receive a response in return.

The code uses the codehooks-js library to handle routing and middleware for the API, and the node-fetch library to make HTTP requests to the OpenAI API. It also uses the built-in codehooks.io Key-Value store to cache each response from the OpenAI API for 60 seconds, which reduces the number of requests made to the OpenAI API. If you would rather build your own Node.js Express backend, you can replace this cache with another store such as Redis.
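To make the caching idea concrete, here is a minimal sketch of the same cache-aside pattern using an in-memory store. The `TtlCache` class and the `cachedAnswer` helper are hypothetical names introduced for illustration only; they are not part of codehooks-js, and a real deployment would use the Key-Value store (or Redis) instead of a `Map`.

```javascript
// Minimal in-memory stand-in for a key-value store with TTL (illustrative only).
class TtlCache {
  constructor(now = () => Date.now()) {
    this.now = now; // injectable clock, handy for testing
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  // Returns the stored value, or null if the key is missing or expired.
  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return null;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // lazily evict expired entries
      return null;
    }
    return entry.value;
  }

  // Stores a value that expires ttl milliseconds from now.
  set(key, value, { ttl }) {
    this.entries.set(key, { value, expiresAt: this.now() + ttl });
  }
}

// Cache-aside: return the cached answer if present, otherwise compute and store it.
async function cachedAnswer(cache, ask, computeAnswer, ttl = 60 * 1000) {
  const key = `chatgpt_cache_${ask}`;
  const hit = cache.get(key);
  if (hit !== null) return hit;
  const answer = await computeAnswer(ask);
  cache.set(key, answer, { ttl });
  return answer;
}
```

With this shape, two identical prompts sent within 60 seconds trigger only one call to `computeAnswer`, which is the effect the Key-Value store cache has on OpenAI API traffic.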

The code also implements a middleware that rate limits requests from the same IP address to 10 per minute.
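The rate limiting idea can be sketched as a fixed-window counter per IP address. This is an in-memory illustration under stated assumptions, not the codehooks.io implementation: `createRateLimiter` and `allow` are hypothetical names, and the exact expiry behaviour of the Key-Value store counter may differ from the window reset shown here.

```javascript
// Fixed-window counter: each IP gets a counter that resets once its window expires.
function createRateLimiter({ limit = 10, windowMs = 60 * 1000, now = () => Date.now() } = {}) {
  const windows = new Map(); // ip -> { count, resetAt }

  // Returns true if the request is allowed, false if it should receive a 429.
  return function allow(ip) {
    const current = now();
    let w = windows.get(ip);
    if (!w || w.resetAt <= current) {
      // Start a fresh window for this IP.
      w = { count: 0, resetAt: current + windowMs };
      windows.set(ip, w);
    }
    w.count += 1;
    return w.count <= limit;
  };
}
```

A middleware would call `allow(ipAddress)` for every request and respond with status 429 when it returns `false`.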

- Read more about the OpenAI API

- Read more about the Key-Value store

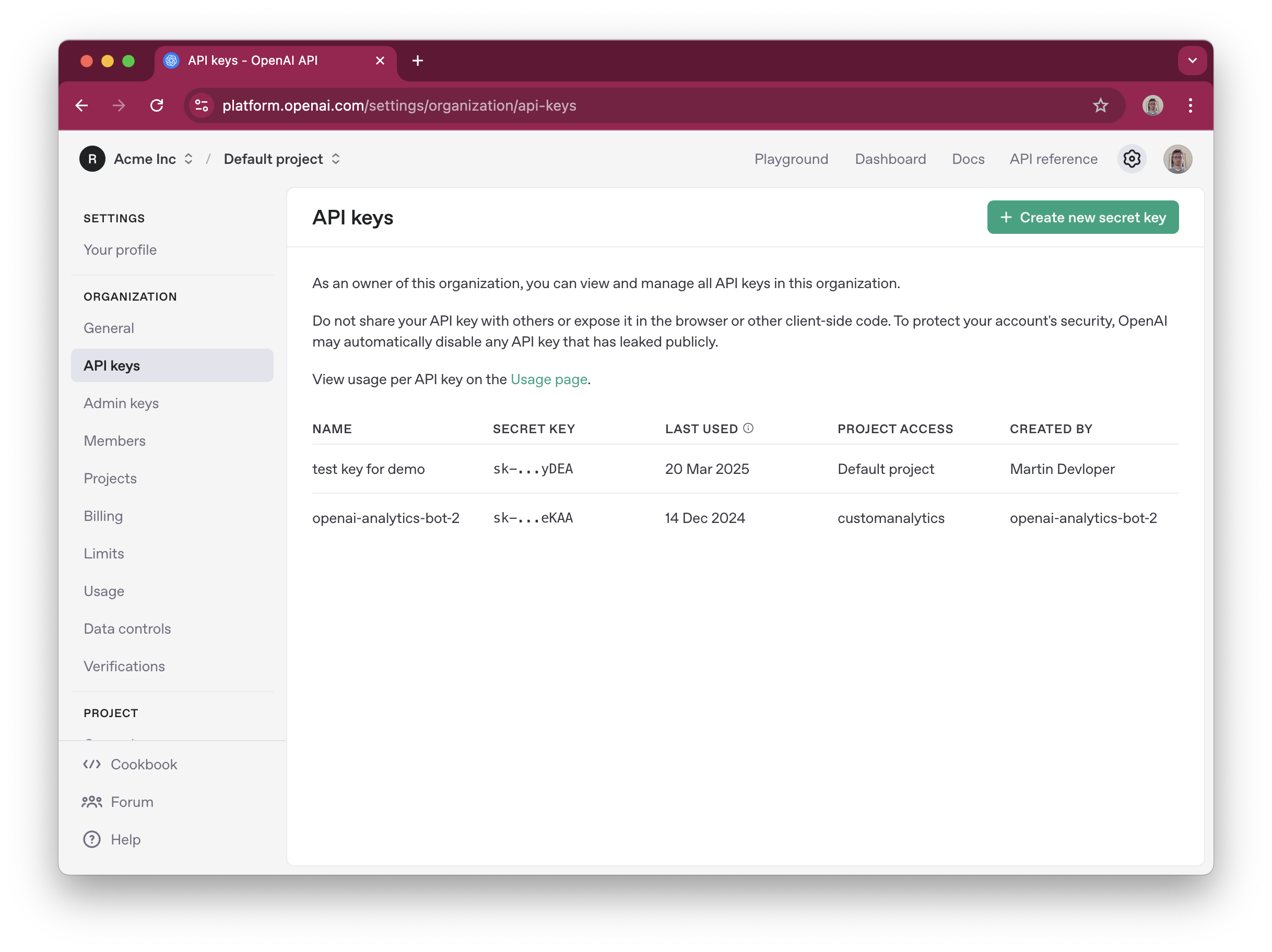

Create the OpenAI API key

Navigate to your OpenAI account settings and create a new secret key.

Create a new project to host the GPT REST API

coho create gptrestapi

This will create a new directory for your REST API backend application.

cd gptrestapi

Copy the source code

import { app, Datastore } from 'codehooks-js';
import fetch from 'node-fetch';

// REST API routes
app.post('/chat', async (req, res) => {
  if (!process.env.OPENAI_API_KEY) {
    return res.status(500).json({ error: 'Missing OPENAI_API_KEY' }); // CLI command: coho set-env OPENAI_API_KEY 'XXX'
  }

  const { ask } = req.body;
  if (!ask) {
    return res.status(400).json({ error: 'Missing ask parameter' });
  }

  const db = await Datastore.open();
  const cacheKey = `chatgpt_cache_${ask}`;

  // Check cache first
  const cachedAnswer = await db.get(cacheKey);
  if (cachedAnswer) {
    return res.json({ response: cachedAnswer });
  }

  try {
    // Call OpenAI API
    const response = await callOpenAiApi(ask);
    const { choices } = response;
    const text = choices?.[0]?.message?.content || 'No response';

    // Cache response for 1 minute
    await db.set(cacheKey, text, { ttl: 60 * 1000 });
    res.json({ response: text });
  } catch (error) {
    console.error('OpenAI API error:', error);
    res.status(500).json({ error: 'Failed to get response from OpenAI' });
  }
});

// Call OpenAI API
async function callOpenAiApi(ask) {
  const requestBody = {
    model: 'gpt-4-turbo',
    messages: [
      { role: 'system', content: 'You are a helpful AI assistant.' },
      { role: 'user', content: ask },
    ],
    temperature: 0.6,
    max_tokens: 1024,
  };

  const requestOptions = {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
    },
    body: JSON.stringify(requestBody),
  };

  const response = await fetch(
    'https://api.openai.com/v1/chat/completions',
    requestOptions
  );
  return response.json();
}

// Global middleware to IP rate limit traffic
app.use(async (req, res, next) => {
  const db = await Datastore.open();
  const ipAddress = req.headers['x-real-ip'] || req.ip;

  // Increase count for IP
  const count = await db.incr(`IP_count_${ipAddress}`, 1, { ttl: 60 * 1000 });
  console.log('Rate limit:', ipAddress, count);

  if (count > 10) {
    return res.status(429).json({ error: 'Too many requests from this IP' });
  }
  next();
});

// Export app to the serverless runtime
export default app.init();

Open the index.js file and replace its contents with the source code above.
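One possible refinement, offered as a sketch rather than as part of the original example: the source code falls back to the string 'No response' when the OpenAI reply has no choices, and that fallback is then cached for 60 seconds. A small helper can turn error replies into exceptions instead, so the existing catch block returns a 500 and nothing is cached. The function name `extractAnswer` is hypothetical, and the error body shape `{ error: { message } }` is an assumption based on how OpenAI error replies generally look.

```javascript
// Turn an HTTP status and a parsed JSON body into an answer string, or throw.
function extractAnswer(status, payload) {
  if (status < 200 || status >= 300) {
    // Assumed error shape: { error: { message, type, ... } }
    const message = payload?.error?.message || `HTTP ${status}`;
    throw new Error(`OpenAI API error: ${message}`);
  }
  const text = payload?.choices?.[0]?.message?.content;
  if (typeof text !== 'string' || text.length === 0) {
    throw new Error('OpenAI API returned no message content');
  }
  return text;
}
```

Inside callOpenAiApi this could be used as `return extractAnswer(response.status, await response.json());`, with the route handler then caching the returned string directly.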

Install dependencies

npm install codehooks-js node-fetch

Create a new secret environment variable

Once you have created your OpenAI API secret key, you need to add it to the serverless runtime. You can use the following CLI command for this.

coho set-env OPENAI_API_KEY 'XXXXXXXX' --encrypted

Deploy the Node.js ChatGPT REST API to the codehooks.io backend

From the project directory run the CLI command coho deploy.

The output from my test project is shown below:

coho deploy

Project: gptrestapi-pwnj Space: dev

Deployed Codehook successfully! 🙌

Test your ChatGPT REST API

The following curl example demonstrates that we have a live REST API that takes a POST body with a text string ask and returns the response from the OpenAI API.

curl -X POST \
  'https://gptrestapi-pwnj.api.codehooks.io/dev/chat' \
  --header 'x-apikey: cb17c77f-2821-489f-a6b4-fb0dae34251b' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "ask": "Make a great title for a code example with ChatGPT and serverless node.js with codehooks.io"
  }'

Output from the ChatGPT REST API is shown below.

"Building Conversational AI with ChatGPT and Serverless Node.js Using Codehooks.io"
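The same request can also be sent from JavaScript. The sketch below assumes Node.js 18 or later (for the global `fetch`) and assumes the API replies with a JSON object containing a `response` field, as the route handler in the source code does. The helper names `buildChatRequest` and `askChat` are hypothetical.

```javascript
// Build the URL and fetch options for a POST to the /chat route.
function buildChatRequest(baseUrl, apiKey, ask) {
  return {
    url: `${baseUrl}/chat`,
    options: {
      method: 'POST',
      headers: {
        'x-apikey': apiKey,
        'Content-Type': 'application/json',
      },
      body: JSON.stringify({ ask }),
    },
  };
}

// Send the prompt and return the "response" field of the JSON reply.
async function askChat(baseUrl, apiKey, ask) {
  const { url, options } = buildChatRequest(baseUrl, apiKey, ask);
  const res = await fetch(url, options); // global fetch, Node.js 18+
  if (!res.ok) {
    throw new Error(`Request failed with status ${res.status}`);
  }
  const data = await res.json();
  return data.response;
}
```

For the project above, `baseUrl` would be 'https://gptrestapi-pwnj.api.codehooks.io/dev' and `apiKey` the x-apikey value used in the curl command. A status of 429 here means the IP rate limit was hit.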

Another example shows that we are actually talking to the OpenAI API.

curl -X POST \
  'https://gptrestapi-pwnj.api.codehooks.io/dev/chat' \
  --header 'x-apikey: cb17c77f-2821-489f-a6b4-fb0dae34251b' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "ask": "Tell a joke"
  }'

Output from the second example 😂:

Q: What did the fish say when it hit the wall?

A: Dam!

The source code for this and other examples is also available in our GitHub example repo.